In 2016, The Chinese University of Hong Kong published a very large set of images of celebrities, along with an exhausting set of annotations, including commonplace and objective measures like “what color is the person’s hair?” and “is the person wearing a hat?” as well as subjective and even nebulous things like “does the person have a 5-o-clock shadow?” and even “is the person attractive?”. I think the data set is designed to encourage the development of machine-learning algorithms for things like facial recognition, but one of my professors was curious about a slightly different measure – would it be possible to merge all 200,000 images into a single, representative face? As it turns out, the answer is yes, it’s relatively simple, and the results are sort of interesting. First, what does the average celebrity face look like? Well, something like this:

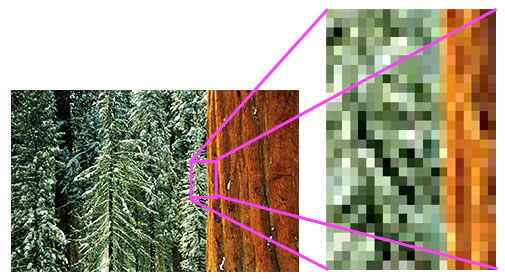

To be honest, I didn’t expect the result to look like a face at all, but it actually does look like it could be a photo of a real person – blurry and washed-out, and a little uncanny valley-ish, but believable. Here’s how this was created. Every photo is made up of tiny blocks, called pixels, and each pixel has a color. Here’s a great visualization of what that looks like from ultimate-photo-tips.com.

Basically, if you look closely enough at any digital image, you’ll be able to see that it’s made up of little blocks of color. Each of those colors is actually made of three parts – a red, blue, and green component and each has a numerical value between 0 and 255 which determines how much it contributes to the overall color of the block. For example, it’s easy to see the heavy red influence in the first color below, and the heavy blue influence in the second.

Red: 255, Green: 52, Blue: 52

Red: 30, Green: 69, Blue: 227

Why does this matter? Well, thankfully, all of the celebrity images were prepared so that they have exactly the same dimensions, and therefore the same number of pixels, aligned exactly. This makes averaging them a simple exercise of collecting the Red, Green, and Blue components of each pixel, and averaging the numerical values over all of the images. If you’re the kind of nerd who likes to read code, here’s one way to accomplish that. If you’d rather just enjoy the show, here’s the same method applied to a few subsets of the images:

There are clearly some biases in the images chosen. For instance, the average face appears to be more female than male and that’s because about 58% of the faces are indeed female. It’s hard to say whether this bias is on the part of the people preparing the data set or because celebrity culture in general favors females, but it’s clearly there. What other observations can you make?